Hey, I’m Yannick Weiss.

I am a Ph.D. student in the field of Human-Computer Interaction at the Ludwig-Maximilians-Universität (LMU) in Munich. My research focuses on haptic perception in extended reality scenarios, from uncovering the underlying processes of multisensory perception to creating and adapting haptic experiences for virtual and augmented environments.

I’m currently working on the research project Illusionary Surface Interfaces, where I investigate the possibilities of sensory illusions to enrich haptic experiences.

Contact: contact(at)yannick-weiss.com

Publications

2025

Yannick Weiss; Steeven Villa; Moritz Ziarko; Florian Müller

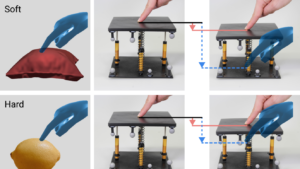

Manipulating Stiffness Perception of Compliant Objects While Pinching in Virtual Reality Proceedings Article OSF

In: Proceedings of the 31st ACM Symposium on Virtual Reality Software and Technology, Association for Computing Machinery, Montreal, QC, Canada, 2025, ISBN: 9798400721182.

@inproceedings{10.1145/3756884.3765988,

title = {Manipulating Stiffness Perception of Compliant Objects While Pinching in Virtual Reality},

author = {Yannick Weiss and Steeven Villa and Moritz Ziarko and Florian Müller},

url = {https://yannick-weiss.com/wp-content/uploads/2025/11/Weiss2025_Manipulating_Stiffness_Perception_of_Compliant_Objects_While_Pinching_in_VR.pdf},

doi = {10.1145/3756884.3765988},

isbn = {9798400721182},

year = {2025},

date = {2025-12-04},

urldate = {2025-11-12},

booktitle = {Proceedings of the 31st ACM Symposium on Virtual Reality Software and Technology},

publisher = {Association for Computing Machinery},

address = {Montreal, QC, Canada},

series = {VRST '25},

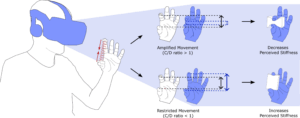

abstract = {Providing users with realistic sensations of object stiffness in virtual environments remains challenging due to the intricacies of our haptic sense. We investigate the use of a visuo-haptic illusion to alter the perceived stiffness of hand-held objects in virtual reality. We manipulate the Control-to-Display ratio of the index finger and thumb movements during pinching to make virtual objects feel softer or harder. We evaluated this approach on a variety of haptic representations and visualizations we selected through a pre-study survey (N=24). Results of our user study (N=20) demonstrate that this method effectively and reliably modifies stiffness perception, bridging gaps of 50% in physical stiffness without adversely affecting the visuo-haptic experience. Our findings offer insights into how different visual and haptic presentations impact stiffness perception, contributing to more effective and adaptable future haptic feedback systems.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Yannick Weiss; Marlene Eder; Oguzhan Cesur; Steeven Villa

Quantifying the Effect of Thermal Illusions in Virtual Reality Proceedings Article OSF

In: Proceedings of the 31st ACM Symposium on Virtual Reality Software and Technology, Association for Computing Machinery, Montreal, QC, Canada, 2025, ISBN: 9798400721182.

@inproceedings{10.1145/3756884.3765988b,

title = {Quantifying the Effect of Thermal Illusions in Virtual Reality},

author = {Yannick Weiss and Marlene Eder and Oguzhan Cesur and Steeven Villa},

url = {https://yannick-weiss.com/wp-content/uploads/2025/11/Weiss2025_Quantifying_the_Effect_of_Thermal_Illusions_in_Virtual_Reality.pdf},

doi = {10.1145/3756884.3766009},

isbn = {9798400721182},

year = {2025},

date = {2025-12-04},

urldate = {2025-11-12},

booktitle = {Proceedings of the 31st ACM Symposium on Virtual Reality Software and Technology},

publisher = {Association for Computing Machinery},

address = {Montreal, QC, Canada},

series = {VRST '25},

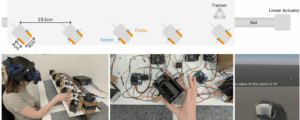

abstract = {Thermal sensations are central to how we experience the world, yet most virtual and extended reality systems fail to simulate them effectively. While hardware-based thermal displays can provide accurate temperature changes, they are often bulky, power-intensive, and restrict user mobility. Consequently, recent works have explored thermal illusions, perceptual effects that rely on cross-modal interactions, to achieve thermal experiences without physical heating or cooling. While thermal illusions have been shown to consistently alter subjective ratings, the actual extent of their effect on the perceived temperature of interacted objects remains unexplored. To address this, we contribute the findings of two user studies following psychophysical procedures. We first ordered and scaled the effects of a variety of visual and auditory cues (N=20) and subsequently quantified their isolated and combined efficacy in offsetting physical temperature changes (N=24). We found that thermal illusions elicited robust changes in subjective judgments, and auditory cues showed potential as an alternative or complementary approach to established visual techniques. However, the actual effects induced by thermal illusions were relatively small (±0.5°C) and did not consistently align with abstract ratings, suggesting a need to reconsider how future thermal illusions or experiences are designed and evaluated.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

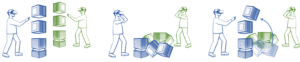

Yannick Weiss; Julian Rasch; Jonas Fischer; Florian Müller

Investigating the Effects of Haptic Illusions in Collaborative Virtual Reality Journal Article OSF

In: IEEE Transactions on Visualization and Computer Graphics, pp. 1-11, 2025.

@article{11190082,

title = {Investigating the Effects of Haptic Illusions in Collaborative Virtual Reality},

author = {Yannick Weiss and Julian Rasch and Jonas Fischer and Florian Müller},

url = {https://yannick-weiss.com/wp-content/uploads/2025/10/weiss2025_MultiuserHapticIllusions.pdf},

doi = {10.1109/TVCG.2025.3616760},

year = {2025},

date = {2025-10-02},

urldate = {2025-10-02},

journal = {IEEE Transactions on Visualization and Computer Graphics},

pages = {1-11},

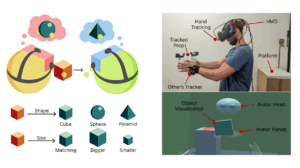

abstract = {Our sense of touch plays a crucial role in physical collaboration, yet rendering realistic haptic feedback in collaborative extended reality (XR) remains a challenge. Co-located XR systems predominantly rely on prefabricated passive props that provide high-fidelity interaction but offer limited adaptability. Haptic Illusions (HIs), which leverage multisensory integration, have proven effective in expanding haptic experiences in single-user contexts. However, their role in XR collaboration has not been explored. To examine the applicability of HIs in multi-user scenarios, we conducted an experimental user study (N=30) investigating their effect on a collaborative object handover task in virtual reality. We manipulated visual shape and size individually and analyzed their impact on users' performance, experience, and behavior. Results show that while participants adapted to the illusions by shifting sensory reliance and employing specific sensorimotor strategies, visuo-haptic mismatches reduced both performance and experience. Moreover, mismatched visualizations in asymmetric user roles negatively impacted performance. Drawing from these findings, we provide practical guidelines for incorporating HIs into collaborative XR, marking a first step toward richer haptic interactions in shared virtual spaces.},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

Yannick Weiss; Albrecht Schmidt; Steeven Villa

Electrophysiological Correlates for the Detection of Haptic Illusions Journal Article OSF

In: IEEE Transactions on Haptics, pp. 1-14, 2025.

@article{11028875,

title = {Electrophysiological Correlates for the Detection of Haptic Illusions},

author = {Yannick Weiss and Albrecht Schmidt and Steeven Villa},

url = {https://yannick-weiss.com/wp-content/uploads/2025/06/ToH_25__EEG_Correlates_for_HI.pdf},

doi = {10.1109/TOH.2025.3578076},

year = {2025},

date = {2025-06-09},

urldate = {2025-06-09},

journal = {IEEE Transactions on Haptics},

pages = {1-14},

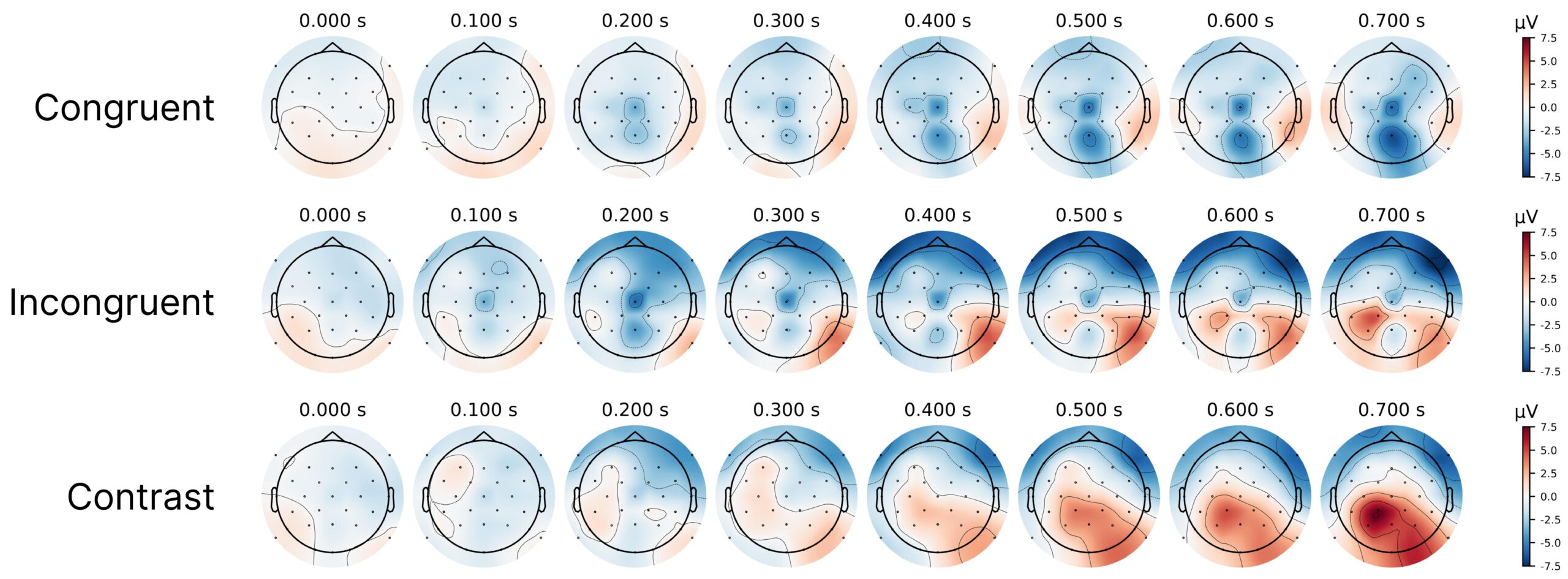

abstract = {Haptic Illusions (HIs) have emerged as a versatile method to enrich haptic experiences for computing systems, especially in virtual reality scenarios. Unlike traditional haptic rendering, HIs do not rely on complex hardware. Instead, HIs leverage multisensory interactions, which can be elicited through audio-visual channels. However, the intensity at which HIs can be effectively applied is highly subject-dependent, and typical measures only estimate generalized boundaries based on small samples. Consequently, resulting techniques compromise the experience for some users and fail to fully exploit an HI for others. We propose adapting HI intensity to the physiological responses of individual users to optimize their haptic experiences. Specifically, we investigate electroencephalographic (EEG) correlates associated with the detection of an HI's manipulations. For this, we integrated EEG with an established psychophysical protocol. Our user study (N=32) revealed distinct and separable EEG markers between detected and undetected HI manipulations. We identified contrasts in oscillatory activity between the central and parietal, as well as in frontal regions, as reliable markers for detection. Further, we trained machine learning models with simple averaged signals, which demonstrated potential for future in situ HI detection. These discoveries pave the way for adaptive HI systems that tailor elicitation to individual and contextual factors, enabling HIs to produce more convincing and reliable haptic feedback.},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

Steeven Villa; Finn Jacob Eliyah Krammer; Yannick Weiss; Robin Welsch; Thomas Kosch

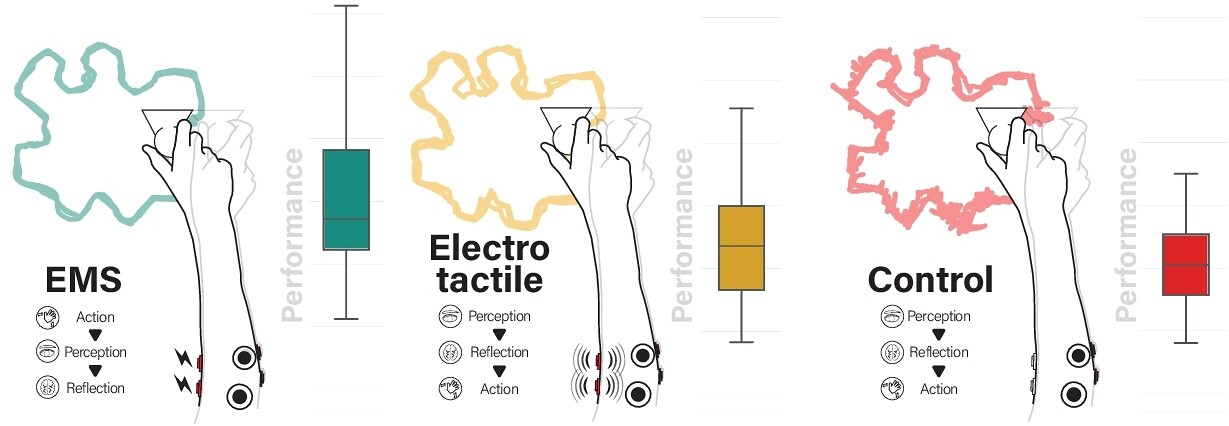

Understanding the Influence of Electrical Muscle Stimulation on Motor Learning: Enhancing Motor Learning or Disrupting Natural Progression? Proceedings Article

In: Proceedings of the 2025 CHI Conference on Human Factors in Computing Systems, Association for Computing Machinery, New York, NY, USA, 2025, ISBN: 9798400713941.

@inproceedings{10.1145/3706598.3714183,

title = {Understanding the Influence of Electrical Muscle Stimulation on Motor Learning: Enhancing Motor Learning or Disrupting Natural Progression?},

author = {Steeven Villa and Finn Jacob Eliyah Krammer and Yannick Weiss and Robin Welsch and Thomas Kosch},

url = {https://yannick-weiss.com/wp-content/uploads/2025/06/villa2025understanding.pdf},

doi = {10.1145/3706598.3714183},

isbn = {9798400713941},

year = {2025},

date = {2025-01-01},

urldate = {2025-01-01},

booktitle = {Proceedings of the 2025 CHI Conference on Human Factors in Computing Systems},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

series = {CHI '25},

abstract = {Electrical Muscle Stimulation (EMS) induces muscle movement through external currents, offering a novel approach to motor learning. Researchers investigated using EMS as an alternative to conventional non-movement-inducing feedback techniques, such as vibrotactile and electrotactile feedback. While EMS shows promise in areas such as dance, sports, and motor skill acquisition, neurophysiological models of motor learning conflict about the impact of externally induced movements on sensorimotor representations. This study evaluated EMS against electrotactile feedback and a control condition in a two-session experiment assessing fast learning, consolidation, and learning transfer. Our results suggest an overall positive impact of EMS in motor learning. Although traditional electrotactile feedback had a higher learning rate, EMS increased the learning plateau, as measured by a three-factor exponential decay model. This study provides empirical evidence supporting EMS as a plausible method for motor augmentation and skill transfer, contributing to understanding its role in motor learning.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

2024

Steeven Villa; Robin Neuhaus; Yannick Weiss; Marc Hassenzahl

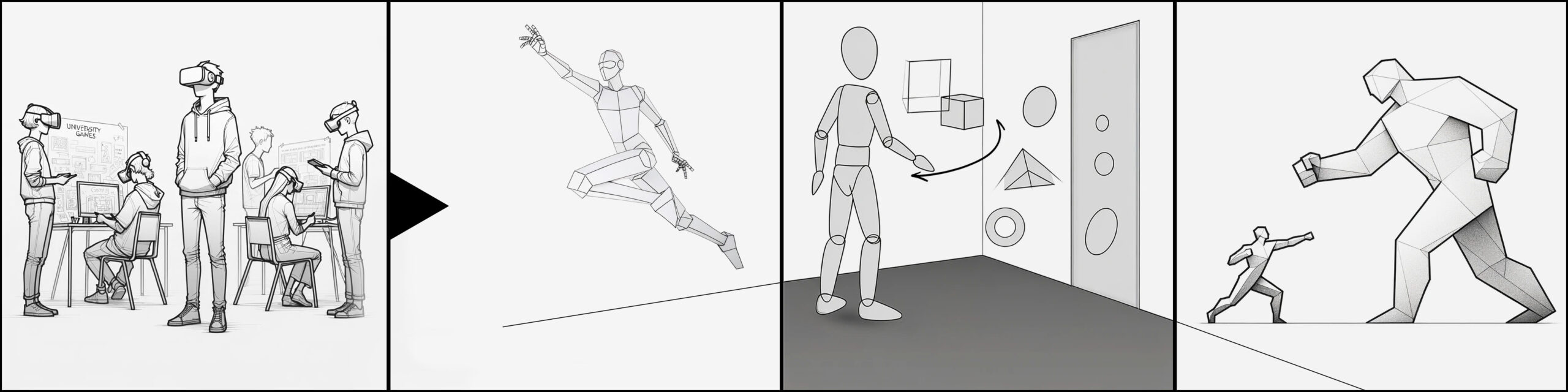

Exploring Virtual Reality as a Platform for Early-Stage Design for Human Augmentation Technologies Proceedings Article

In: Proceedings of the International Conference on Mobile and Ubiquitous Multimedia, pp. 464–466, Association for Computing Machinery, New York, NY, USA, 2024, ISBN: 9798400712838.

@inproceedings{10.1145/3701571.3703386,

title = {Exploring Virtual Reality as a Platform for Early-Stage Design for Human Augmentation Technologies},

author = {Steeven Villa and Robin Neuhaus and Yannick Weiss and Marc Hassenzahl},

doi = {10.1145/3701571.3703386},

isbn = {9798400712838},

year = {2024},

date = {2024-12-02},

urldate = {2024-12-02},

booktitle = {Proceedings of the International Conference on Mobile and Ubiquitous Multimedia},

pages = {464–466},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

series = {MUM '24},

abstract = {Human Augmentation Technologies (HATs) aim to enhance human capabilities and transform our interactions with the environment and each other. However, testing and prototyping HATs is complex and challenging. This study explores using Virtual Reality (VR) as a developmental platform for HATs within an educational framework. Through a semester-long course, students designed virtual augmentations within a VR environment. We showcase three resulting VR applications and interviewed four students about their experiences ideating and implementing virtual augmentations. Our results highlight the need for a set of guidelines for virtual augmentations based on best practices. Moreover, participants mostly opted for physical augmentations, revealing limitations of VR, i.e., simulator sickness and absence of haptic feedback. Despite challenges, the virtual augmentations received positive feedback, offering stimulating and immersive experiences. This highlights the potential of virtual augmentations as a driver for engaging experiences in VR and as a valuable tool for early HAT development and research.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Steeven Villa; Yannick Weiss; Mei Yi Lu; Moritz Ziarko; Albrecht Schmidt; Jasmin Niess

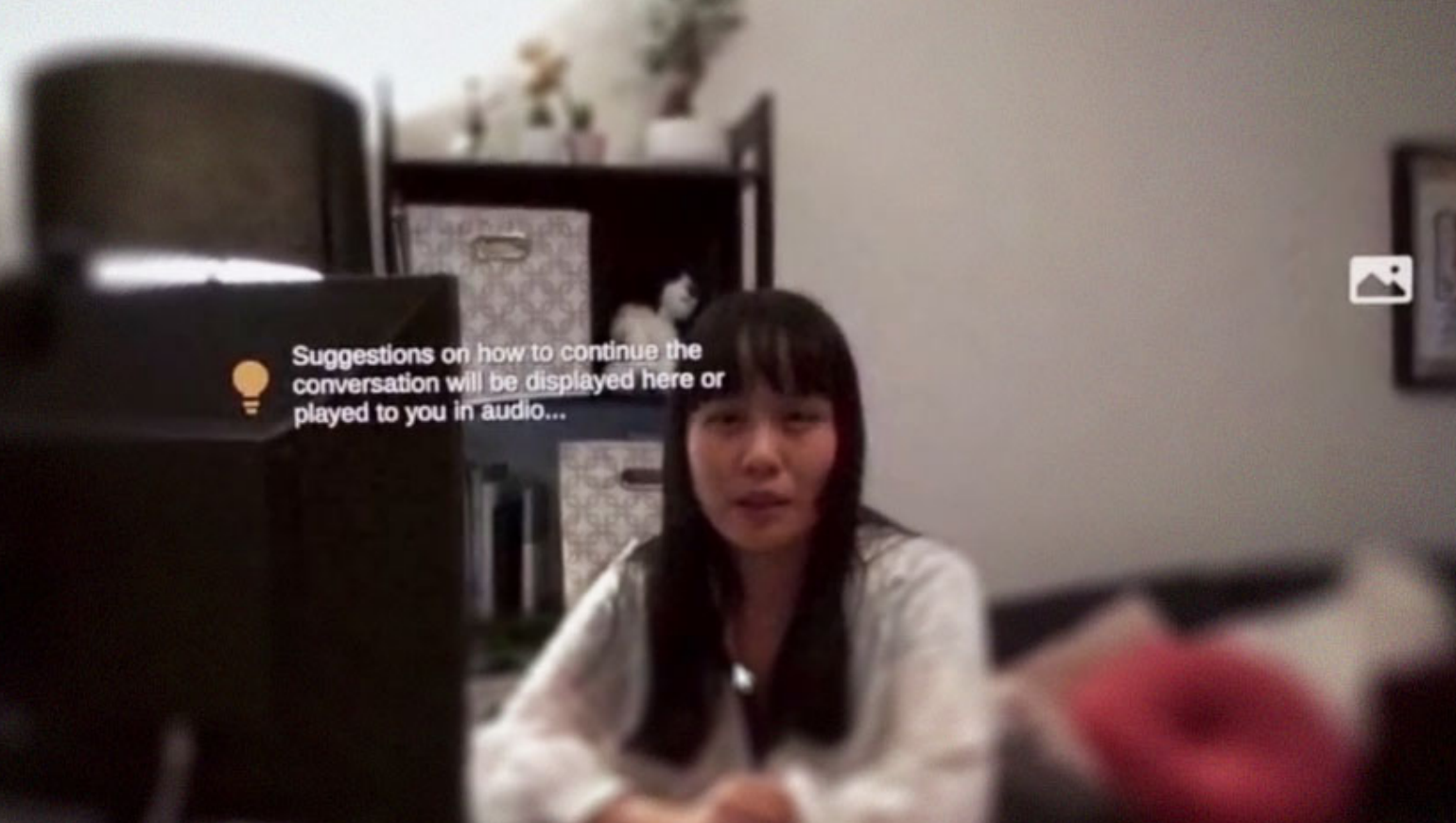

Envisioning Futures: How the Modality of AI Recommendations Impacts Conversation Flow in AR-enhanced Dialogue Proceedings Article

In: Proceedings of the 26th International Conference on Multimodal Interaction, pp. 182–193, Association for Computing Machinery, San Jose, Costa Rica, 2024, ISBN: 9798400704628.

@inproceedings{10.1145/3678957.3685731,

title = {Envisioning Futures: How the Modality of AI Recommendations Impacts Conversation Flow in AR-enhanced Dialogue},

author = {Steeven Villa and Yannick Weiss and Mei Yi Lu and Moritz Ziarko and Albrecht Schmidt and Jasmin Niess},

doi = {10.1145/3678957.3685731},

isbn = {9798400704628},

year = {2024},

date = {2024-11-04},

urldate = {2024-11-04},

booktitle = {Proceedings of the 26th International Conference on Multimodal Interaction},

pages = {182–193},

publisher = {Association for Computing Machinery},

address = {San Jose, Costa Rica},

series = {ICMI '24},

abstract = {The use of AI is becoming more common among the population every day; the use of generative AI, such as LLMs, empowers individuals by supporting daily life tasks. Yet, the user interaction with AI models is mostly constrained to chatbot interactions. However, we envision that in the near future, individuals will be able to integrate the use of these technologies into their daily activities without refocusing their attention. Consequently, we explore the impact of such integration on individuals’ conversations. In detail, this paper investigates how different modes of information presentation (visual vs. auditory) and triggers for AI action (mechanical vs. ocular) influence conversational dynamics and user experiences. We conducted a mixed-method, within-subjects study with 21 participants using a Discourse Completion Task (DCT) to observe how users develop their discourse in the presence of AI-generated suggestions. Our study examines the effects of presentation modality on response delay, response length, and response similarity to the AI prompt. The results highlight the significance of managing the balance between human and AI input in conversation, revealing insights into user experience factors with AI assistance in face-to-face conversational settings.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

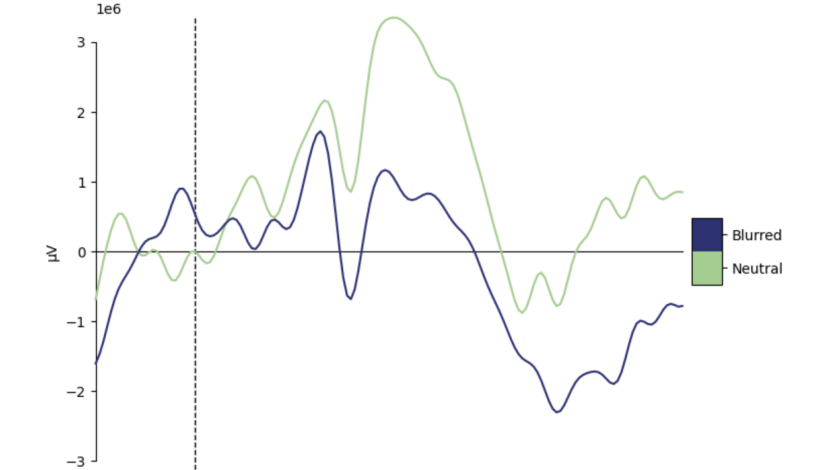

Francesco Chiossi; Yannick Weiss; Thomas Steinbrecher; Christian Mai; Thomas Kosch

Mind the Visual Discomfort: Assessing Event-Related Potentials as Indicators for Visual Strain in Head-Mounted Displays Proceedings Article OSF

In: 2024 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), IEEE, New York, NY, USA, 2024.

@inproceedings{Chiossi2024,

title = {Mind the Visual Discomfort: Assessing Event-Related Potentials as Indicators for Visual Strain in Head-Mounted Displays},

author = {Francesco Chiossi and Yannick Weiss and Thomas Steinbrecher and Christian Mai and Thomas Kosch},

url = {https://arxiv.org/pdf/2407.18548},

doi = {10.1109/ISMAR62088.2024.00029},

year = {2024},

date = {2024-10-21},

urldate = {2024-10-21},

booktitle = {2024 IEEE International Symposium on Mixed and Augmented Reality (ISMAR)},

publisher = {IEEE},

address = {New York, NY, USA},

abstract = {When using Head-Mounted Displays (HMDs), users may not always notice or report visual discomfort caused by blurred vision through unadjusted lenses, motion sickness, and increased eye strain. Current measures for visual discomfort rely on users' self-reports, which are susceptible to subjective differences and lack real-time insights. In this work, we investigate if Electroencephalography (EEG) can objectively measure visual discomfort by sensing Event-Related Potentials (ERPs). In a user study (N=20), we compare four different levels of Gaussian blur while measuring ERPs at occipito-parietal EEG electrodes. The findings reveal that specific ERP components (i.e., P1, N2, and P3) discriminated discomfort-related visual stimuli and indexed increased load on visual processing and fatigue. We conclude that time-locked brain activity can be used to evaluate visual discomfort and propose EEG-based automatic discomfort detection and prevention tools.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

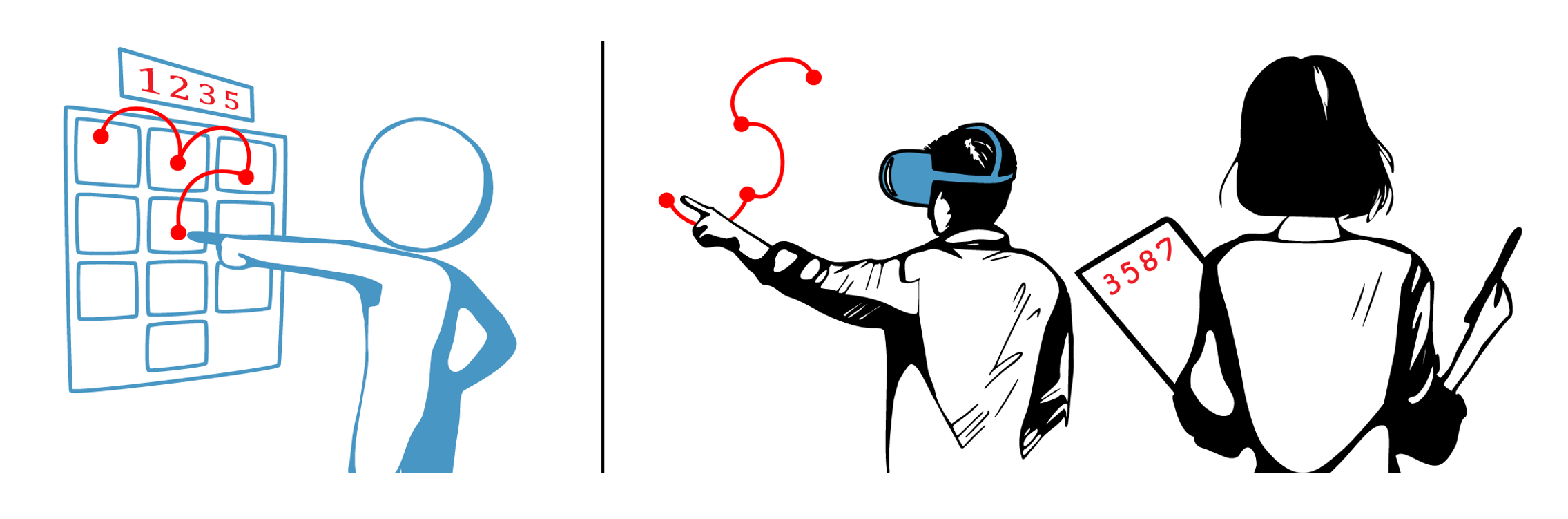

Yannick Weiss; Steeven Villa; Jesse W Grootjen; Matthias Hoppe; Yasin Kale; Florian Müller

Exploring Redirection and Shifting Techniques to Mask Hand Movements from Shoulder-Surfing Attacks during PIN Authentication in Virtual Reality Journal Article

In: Proc. ACM Hum.-Comput. Interact., vol. 8, no. MHCI, 2024.

@article{10.1145/3676502,

title = {Exploring Redirection and Shifting Techniques to Mask Hand Movements from Shoulder-Surfing Attacks during PIN Authentication in Virtual Reality},

author = {Yannick Weiss and Steeven Villa and Jesse W Grootjen and Matthias Hoppe and Yasin Kale and Florian Müller},

url = {https://yannick-weiss.com/wp-content/uploads/2024/10/MobileHCI_24__Redirected_Authentication_in_VR.pdf},

doi = {10.1145/3676502},

year = {2024},

date = {2024-09-01},

urldate = {2024-09-01},

journal = {Proc. ACM Hum.-Comput. Interact.},

volume = {8},

number = {MHCI},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

abstract = {The proliferation of mobile Virtual Reality (VR) headsets shifts our interaction with virtual worlds beyond our living rooms into shared spaces. Consequently, we are entrusting more and more personal data to these devices, calling for strong security measures and authentication. However, the standard authentication method of such devices - entering PINs via virtual keyboards - is vulnerable to shoulder-surfing, as movements to enter keys can be monitored by an unnoticed observer. To address this, we evaluated masking techniques to obscure VR users' input during PIN authentication by diverting their hand movements. Through two experimental studies, we demonstrate that these methods increase users' security against shoulder-surfing attacks from observers without excessively impacting their experience and performance. With these discoveries, we aim to enhance the security of future VR authentication without disrupting the virtual experience or necessitating additional hardware or training of users.},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

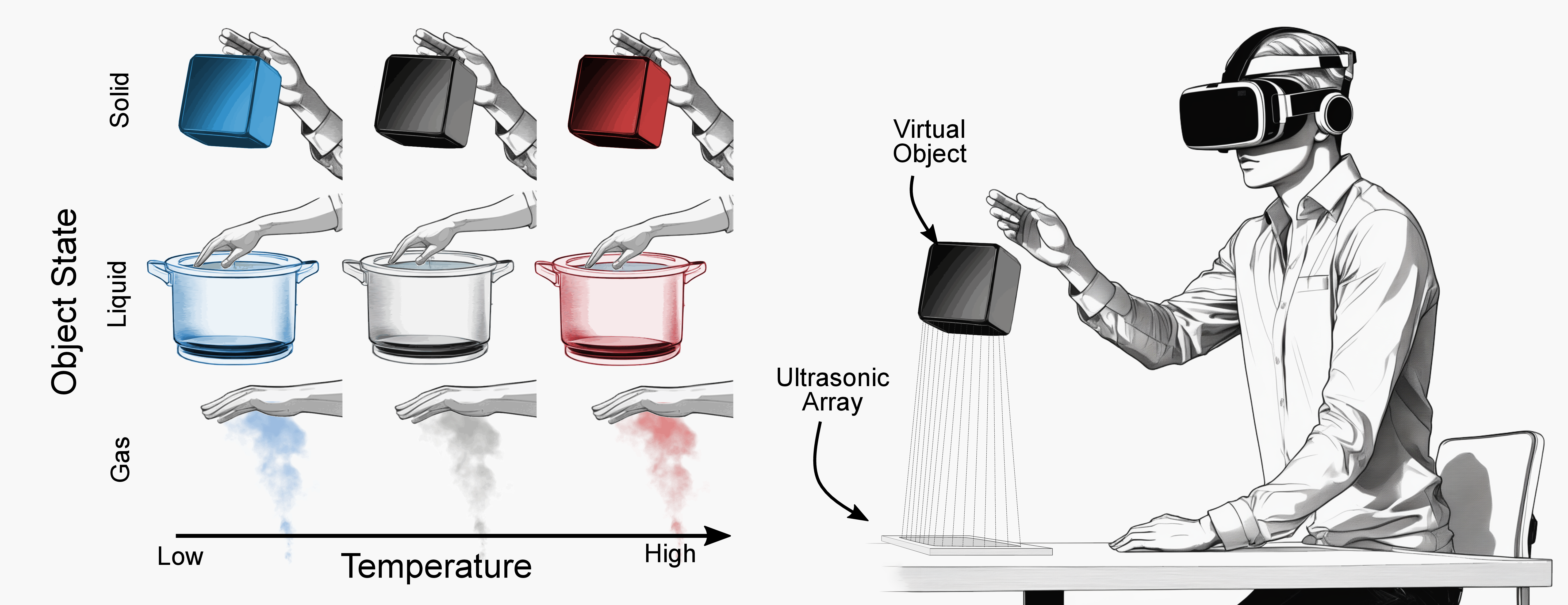

Steeven Villa; Yannick Weiss; Niklas Hirsch; Alexander Wiethoff

An Examination of Ultrasound Mid-air Haptics for Enhanced Material and Temperature Perception in Virtual Environments Journal Article

In: Proc. ACM Hum.-Comput. Interact., vol. 8, no. MHCI, 2024.

@article{Villa2024,

title = {An Examination of Ultrasound Mid-air Haptics for Enhanced Material and Temperature Perception in Virtual Environments},

author = {Steeven Villa and Yannick Weiss and Niklas Hirsch and Alexander Wiethoff},

url = {https://doi.org/10.1145/3676488},

doi = {10.1145/3676488},

year = {2024},

date = {2024-09-01},

urldate = {2024-09-01},

journal = {Proc. ACM Hum.-Comput. Interact.},

volume = {8},

number = {MHCI},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

abstract = {Rendering realistic tactile sensations of virtual objects remains a challenge in VR. While haptic interfaces have advanced, particularly with phased arrays, their ability to create realistic object properties like state and temperature remains unclear. This study investigates the potential of Ultrasound Mid-air Haptics (UMH) for enhancing the perceived congruency of virtual objects. In a user study with 30 participants, we assessed how UMH impacts the perceived material state and temperature of virtual objects. We also analyzed EEG data to understand how participants integrate UMH information physiologically. Our results reveal that UMH significantly enhances the perceived congruency of virtual objects, particularly for solid objects, reducing the feeling of mismatch between visual and tactile feedback. Additionally, UMH consistently increases the perceived temperature of virtual objects. These findings offer valuable insights for haptic designers, demonstrating UMH's potential for creating more immersive tactile experiences in VR by addressing key limitations in current haptic technologies.},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

Carolin Stellmacher; Florian Mathis; Yannick Weiss; Meagan B. Loerakker; Nadine Wagener; Johannes Schöning

Exploring Mobile Devices as Haptic Interfaces for Mixed Reality Proceedings Article

In: Proceedings of the CHI Conference on Human Factors in Computing Systems, pp. 1-17, Association for Computing Machinery, New York, NY, USA, 2024, ISBN: 9798400703300.

@inproceedings{10.1145/3613904.3642176,

title = {Exploring Mobile Devices as Haptic Interfaces for Mixed Reality},

author = {Carolin Stellmacher and Florian Mathis and Yannick Weiss and Meagan B. Loerakker and Nadine Wagener and Johannes Schöning},

url = {https://doi.org/10.1145/3613904.3642176},

doi = {10.1145/3613904.3642176},

isbn = {9798400703300},

year = {2024},

date = {2024-05-11},

urldate = {2024-05-11},

booktitle = {Proceedings of the CHI Conference on Human Factors in Computing Systems},

pages = {1-17},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

series = {CHI '24},

abstract = {Dedicated handheld controllers facilitate haptic experiences of virtual objects in mixed reality (MR). However, as mobile MR becomes more prevalent, we observe the emergence of controller-free MR interactions. To retain immersive haptic experiences, we explore the use of mobile devices as a substitute for specialised MR controllers. In an exploratory gesture elicitation study (n = 18), we examined users’ (1) intuitive hand gestures performed with prospective mobile devices and (2) preferences for real-time haptic feedback when exploring haptic object properties. Our results reveal three haptic exploration modes for the mobile device, as an object, hand substitute, or as an additional tool, and emphasise the benefits of incorporating the device’s unique physical features into the object interaction. This work expands the design possibilities using mobile devices for tangible object interaction, guiding the future design of mobile devices for haptic MR experiences.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Julian Rasch; Florian Perzl; Yannick Weiss; Florian Müller

Just Undo It: Exploring Undo Mechanics in Multi-User Virtual Reality Proceedings Article

In: Proceedings of the CHI Conference on Human Factors in Computing Systems, pp. 1–14, Association for Computing Machinery, New York, NY, USA, 2024, ISBN: 9798400703300.

@inproceedings{10.1145/3613904.3642864,

title = {Just Undo It: Exploring Undo Mechanics in Multi-User Virtual Reality},

author = {Julian Rasch and Florian Perzl and Yannick Weiss and Florian Müller},

url = {https://doi.org/10.1145/3613904.3642864},

doi = {10.1145/3613904.3642864},

isbn = {9798400703300},

year = {2024},

date = {2024-05-11},

urldate = {2024-05-11},

booktitle = {Proceedings of the CHI Conference on Human Factors in Computing Systems},

pages = {1–14},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

series = {CHI '24},

abstract = {With the proliferation of VR and a metaverse on the horizon, many multi-user activities are migrating to the VR world, calling for effective collaboration support. As one key feature, traditional collaborative systems provide users with undo mechanics to reverse errors and other unwanted changes. While undo has been extensively researched in this domain and is now considered industry standard, it is strikingly absent for VR systems in research and industry. This work addresses this research gap by exploring different undo techniques for basic object manipulation in different collaboration modes in VR. We conducted a study involving 32 participants organized in teams of two. Here, we studied users’ performance and preferences in a tower stacking task, varying the available undo techniques and their mode of collaboration. The results suggest that users desire and use undo in VR and that the choice of the undo technique impacts users’ performance and social connection.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Marco Kurzweg*; Yannick Weiss*; Marc O. Ernst; Albrecht Schmidt; Katrin Wolf

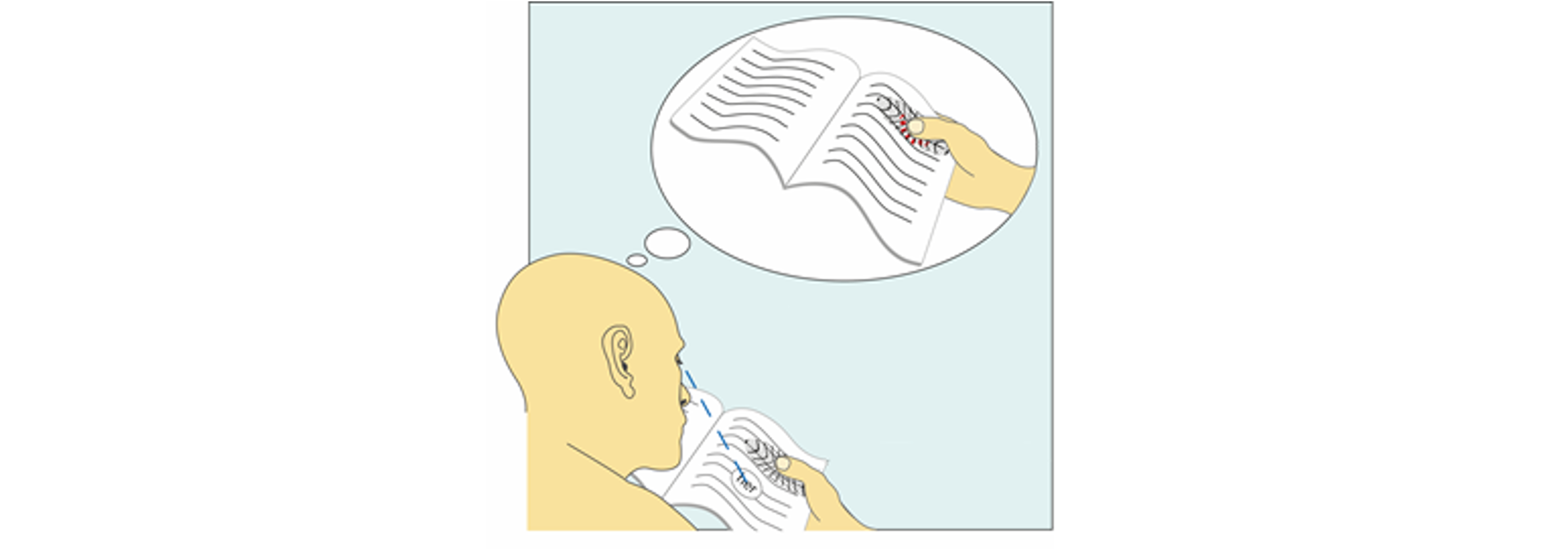

A Survey on Haptic Feedback through Sensory Illusions in Interactive Systems Journal Article

In: ACM Comput. Surv., vol. 56, iss. 8, no. 194, pp. 1-39, 2024, ISSN: 0360-0300, (*Both authors contributed equally to this work).

@article{10.1145/3648353,

title = {A Survey on Haptic Feedback through Sensory Illusions in Interactive Systems},

author = {Marco Kurzweg* and Yannick Weiss* and Marc O. Ernst and Albrecht Schmidt and Katrin Wolf},

url = {https://doi.org/10.1145/3648353},

doi = {10.1145/3648353},

issn = {0360-0300},

year = {2024},

date = {2024-04-10},

urldate = {2024-04-10},

journal = {ACM Comput. Surv.},

volume = {56},

number = {194},

issue = {8},

pages = {1-39},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

abstract = {A growing body of work in human-computer interaction (HCI), particularly work on haptic feedback and haptic displays, relies on sensory illusions, which is a phenomenon investigated in perception research. However, an overview of which illusions are prevalent in HCI for generating haptic feedback in computing systems and which remain underrepresented, as well as the rationales and possible undiscovered potentials therein, have not yet been provided. Existing surveys on human-computer interfaces using sensory illusions are not only outdated but, more importantly, they do not consider literature across disciplines, namely perception research and HCI. This paper provides a systematic literature review (SLR) of haptic feedback generated by sensory illusions. By reporting and discussing the findings of 90 publications, we provide an overview of how sensory illusions can be used and adapted to produce haptic feedback and how they are implemented and evaluated in HCI. We moreover identify current trends and research gaps and discuss ideas for possible research directions worth investigating.},

note = {*Both authors contributed equally to this work},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

2023

Yannick Weiss; Steeven Villa; Albrecht Schmidt; Sven Mayer; Florian Müller

Using Pseudo-Stiffness to Enrich the Haptic Experience in Virtual Reality Proceedings Article

In: Proceedings of the 42nd ACM Conference on Human Factors in Computing Systems, Association for Computing Machinery, New York, NY, USA, 2023.

@inproceedings{weiss2023using,

title = {Using Pseudo-Stiffness to Enrich the Haptic Experience in Virtual Reality},

author = {Yannick Weiss and Steeven Villa and Albrecht Schmidt and Sven Mayer and Florian Müller},

url = {https://dx.doi.org/10.1145/3544548.3581223

https://yannick-weiss.com/wp-content/uploads/2023/04/Weiss2023_PseudoStiffness.pdf},

doi = {10.1145/3544548.3581223},

year = {2023},

date = {2023-04-23},

urldate = {2023-04-23},

booktitle = {Proceedings of the 42nd ACM Conference on Human Factors in Computing Systems},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

series = {CHI '23},

abstract = {Providing users with a haptic sensation of the hardness and softness of objects in virtual reality is an open challenge. While physical props and haptic devices help, their haptic properties do not allow for dynamic adjustments. To overcome this limitation, we present a novel technique for changing the perceived stiffness of objects based on a visuo-haptic illusion. We achieved this by manipulating the hands' Control-to-Display (C/D) ratio in virtual reality while pressing down on an object with fixed stiffness. In the first study (N=12), we determine the detection thresholds of the illusion. Our results show that we can exploit a C/D ratio from 0.7 to 3.5 without user detection. In the second study (N=12), we analyze the illusion's impact on the perceived stiffness. Our results show that participants perceive the objects to be up to 28.1% softer and 8.9% stiffer, allowing for various haptic applications in virtual reality.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Jakob Carl Uhl; Helmut Schrom-Feiertag; Georg Regal; Linda Hirsch; Yannick Weiss; Manfred Tscheligi

When Realities Interweave: Exploring the Design Space of Immersive Tangible XR Proceedings Article Workshop

In: Proceedings of the Seventeenth International Conference on Tangible, Embedded, and Embodied Interaction, Association for Computing Machinery, Warsaw, Poland, 2023, ISBN: 9781450399777.

@inproceedings{10.1145/3569009.3571843,

title = {When Realities Interweave: Exploring the Design Space of Immersive Tangible XR},

author = {Jakob Carl Uhl and Helmut Schrom-Feiertag and Georg Regal and Linda Hirsch and Yannick Weiss and Manfred Tscheligi},

doi = {10.1145/3569009.3571843},

isbn = {9781450399777},

year = {2023},

date = {2023-02-26},

urldate = {2023-02-26},

booktitle = {Proceedings of the Seventeenth International Conference on Tangible, Embedded, and Embodied Interaction},

publisher = {Association for Computing Machinery},

address = {Warsaw, Poland},

series = {TEI '23},

abstract = {Tangible devices and interaction in Extended Reality (XR) increase immersion and enable users to perform tasks more intuitively, accurately and joyfully across the reality-virtuality continuum. Upon reviewing the literature, we noticed no clear trend for a publication venue, as well as no standard in evaluating the effects of tangible XR. To position the topic of tangible XR in the TEI community, we propose a hands-on studio, where participants will bring in their own ideas for tangible XR from their application fields, and develop prototypes with the cutting-edge technology and a selection of virtual assets provided. Additionally, we will collectively reflect upon evaluation methods on tangible XR, and aim to find a consensus of a core evaluation suite. With this, we aim to foster a practical understanding and spark new developments in tangible XR and its use cases within the TEI community.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Marco Kurzweg; Simon Linke; Yannick Weiss; Maximilian Letter; Albrecht Schmidt; Katrin Wolf

Assignment of a Vibration to a Graphical Object Induced by Resonant Frequency Proceedings Article

In: Nocera, José Abdelnour; Lárusdóttir, Marta Kristín; Petrie, Helen; Piccinno, Antonio; Winckler, Marco (Ed.): Human-Computer Interaction – INTERACT 2023, pp. 523–545, Springer Nature Switzerland, Cham, 2023, ISBN: 978-3-031-42280-5.

@inproceedings{10.1007/978-3-031-42280-5_33,

title = {Assignment of a Vibration to a Graphical Object Induced by Resonant Frequency},

author = {Marco Kurzweg and Simon Linke and Yannick Weiss and Maximilian Letter and Albrecht Schmidt and Katrin Wolf},

editor = {José Abdelnour Nocera and Marta Kristín Lárusdóttir and Helen Petrie and Antonio Piccinno and Marco Winckler},

doi = {10.1007/978-3-031-42280-5_33},

isbn = {978-3-031-42280-5},

year = {2023},

date = {2023-01-01},

urldate = {2023-01-01},

booktitle = {Human-Computer Interaction -- INTERACT 2023},

pages = {523--545},

publisher = {Springer Nature Switzerland},

address = {Cham},

abstract = {This work aims to provide tactile feedback when touching elements on everyday surfaces using their resonant frequencies. We used a remote speaker to bring a thin wooden surface into vibration for providing haptic feedback when a small graphical fly glued on the board was touched. Participants assigned the vibration to the fly instead of the board it was glued on. We systematically explored when that assignment illusion works best. The results indicate that additional sound, as well as vibration, lasting as long as the touch, are essential factors for having an assignment of the haptic feedback to the touched graphical object. With this approach, we contribute to ubiquitous and calm computing by showing that resonant frequency can provide vibrotactile feedback for images on thin everyday surfaces using only a minimum of hardware.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

2022

Katrin Wolf; Marco Kurzweg; Yannick Weiss; Stephen Brewster; Albrecht Schmidt

Visuo-Haptic Interaction Proceedings Article Workshop

In: Proceedings of the 2022 International Conference on Advanced Visual Interfaces, Association for Computing Machinery, Frascati, Rome, Italy, 2022, ISBN: 9781450397193.

@inproceedings{10.1145/3531073.3535260,

title = {Visuo-Haptic Interaction},

author = {Katrin Wolf and Marco Kurzweg and Yannick Weiss and Stephen Brewster and Albrecht Schmidt},

url = {https://illusionui.org/workshop/},

doi = {10.1145/3531073.3535260},

isbn = {9781450397193},

year = {2022},

date = {2022-01-01},

urldate = {2022-01-01},

booktitle = {Proceedings of the 2022 International Conference on Advanced Visual Interfaces},

publisher = {Association for Computing Machinery},

address = {Frascati, Rome, Italy},

series = {AVI 2022},

abstract = {While traditional interfaces in human-computer interaction mainly rely on vision and audio, haptics becomes more and more important. Haptics cannot only increase the user experience and make technology more immersive, it can also transmit information that is hard to interpret only through vision and audio, such as the softness of a surface or other material properties. In this workshop, we aim at discussing how we could interact with technology if haptics is strongly supported and which novel research areas could emerge.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

2019

Daniel Hepperle; Yannick Weiss; Andreas Siess; Matthias Wölfel

2D, 3D or speech? A case study on which user interface is preferable for what kind of object interaction in immersive virtual reality Journal Article

In: Computers & Graphics, vol. 82, pp. 321-331, 2019, ISSN: 0097-8493.

@article{HEPPERLE2019321,

title = {2D, 3D or speech? A case study on which user interface is preferable for what kind of object interaction in immersive virtual reality},

author = {Daniel Hepperle and Yannick Weiss and Andreas Siess and Matthias Wölfel},

url = {https://www.sciencedirect.com/science/article/pii/S0097849319300974},

doi = {10.1016/j.cag.2019.06.003},

issn = {0097-8493},

year = {2019},

date = {2019-08-01},

urldate = {2019-08-01},

journal = {Computers & Graphics},

volume = {82},

pages = {321-331},

abstract = {Recent developments in human machine interaction offer three principal different approaches to interact with 3D environments, namely: 2D overlays using icons, 3D interfaces resembling interactions of the real world and speech interfaces which matured in the last years and are becoming more and more popular in other context such as smartphones or smart homes. Faced with the task to select the best interaction strategy to interact with immersive environments one is left with best practice and literature. But neither offers a clear strategy and all the methods are used widely. In particular a consistent comparison providing insights on when to use what interface in immersive virtual environments is missing. In order to evaluate the relative strengths and weaknesses of each interface in relation to different tasks in immersive environments a quantitative user study has been conducted. Results showed significant differences on the interface performances according to different parameters: ease of learning (Speech and 2D are favored), uncomplicated handling (Speech), speed (Speech and 2D), overview (2D), fun (3D), comprehension (2D and 3D) and on how simple and efficient it is to handle text input (Speech).},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

2018

Yannick Weiss; Daniel Hepperle; Andreas Sieß; Matthias Wölfel

What User Interface to Use for Virtual Reality? 2D, 3D or Speech–A User Study Proceedings Article

In: 2018 International Conference on Cyberworlds (CW), pp. 50-57, IEEE, 2018.

@inproceedings{Weiss2018,

title = {What User Interface to Use for Virtual Reality? 2D, 3D or Speech–A User Study},

author = {Yannick Weiss and Daniel Hepperle and Andreas Sieß and Matthias Wölfel},

url = {https://ieeexplore.ieee.org/abstract/document/8590016},

doi = {10.1109/CW.2018.00021},

year = {2018},

date = {2018-12-27},

urldate = {2018-12-27},

booktitle = {2018 International Conference on Cyberworlds (CW)},

pages = {50-57},

publisher = {IEEE},

abstract = {In virtual reality different demands on the user interface have to be addressed than on classic screen applications. That's why established strategies from other digital media cannot be transferred unreflected and at least adaptation is required. So one of the leading questions is: which form of interface is preferable for virtual reality? Are 2D interfaces, which are mostly used in combination with mouse or touch interactions, the means of choice, although they do not use the medium's full capabilities? What about 3D interfaces that can be naturally integrated into the virtual space? And last but not least: are speech interfaces, the fastest and most natural form of human interaction/communication, which have recently established themselves in other areas (e.g. digital assistants), ready to conquer the world of virtual reality? To answer these questions this work compares these three approaches based on a quantitative user study and highlights advantages and disadvantages of the respective interfaces for virtual reality applications.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Michael Braun; Sarah Theres Völkel; Gesa Wiegand; Thomas Puls; Daniel Steidl; Yannick Weiss; Florian Alt

The Smile is The New Like: Controlling Music with Facial Expressions to Minimize Driver Distraction Proceedings Article

In: Proceedings of the 17th International Conference on Mobile and Ubiquitous Multimedia, pp. 383–389, Association for Computing Machinery, Cairo, Egypt, 2018, ISBN: 9781450365949.

@inproceedings{10.1145/3282894.3289729,

title = {The Smile is The New Like: Controlling Music with Facial Expressions to Minimize Driver Distraction},

author = {Michael Braun and Sarah Theres Völkel and Gesa Wiegand and Thomas Puls and Daniel Steidl and Yannick Weiss and Florian Alt},

doi = {10.1145/3282894.3289729},

isbn = {9781450365949},

year = {2018},

date = {2018-11-25},

urldate = {2018-11-25},

booktitle = {Proceedings of the 17th International Conference on Mobile and Ubiquitous Multimedia},

pages = {383--389},

publisher = {Association for Computing Machinery},

address = {Cairo, Egypt},

series = {MUM '18},

abstract = {The control of user interfaces while driving is a textbook example for driver distraction. Modern in-car interfaces are growing in complexity and visual demand, yet they need to stay simple enough to handle while driving. One common approach to solve this problem are multimodal interfaces, incorporating e.g. touch, speech, and mid-air gestures for the control of distinct features. This allows for an optimization of used cognitive resources and can relieve the driver of potential overload. We introduce a novel modality for in-car interaction: our system allows drivers to use facial expressions to control a music player. The results of a user study show that both implicit emotion recognition and explicit facial expressions are applicable for music control in cars. Subconscious emotion recognition could decrease distraction, while explicit expressions can be used as an alternative input modality. A simple smiling gesture showed good potential, e.g. to save favorite songs.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}